Communication and Interaction

We have been working on applying robot audition technology to human-robot communication: for example, a robot that listens to music and interacts adaptively with people, a robot that can recognize the surrounding sound environment while suppressing its own ego-noise, and a barge-in-capable robot that can continue a conversation with a user even while the robot itself is talking.

In April 2021, following the change of the lab's name, we started new research themes aimed at future applications in communication and interaction, building further on our existing technologies.

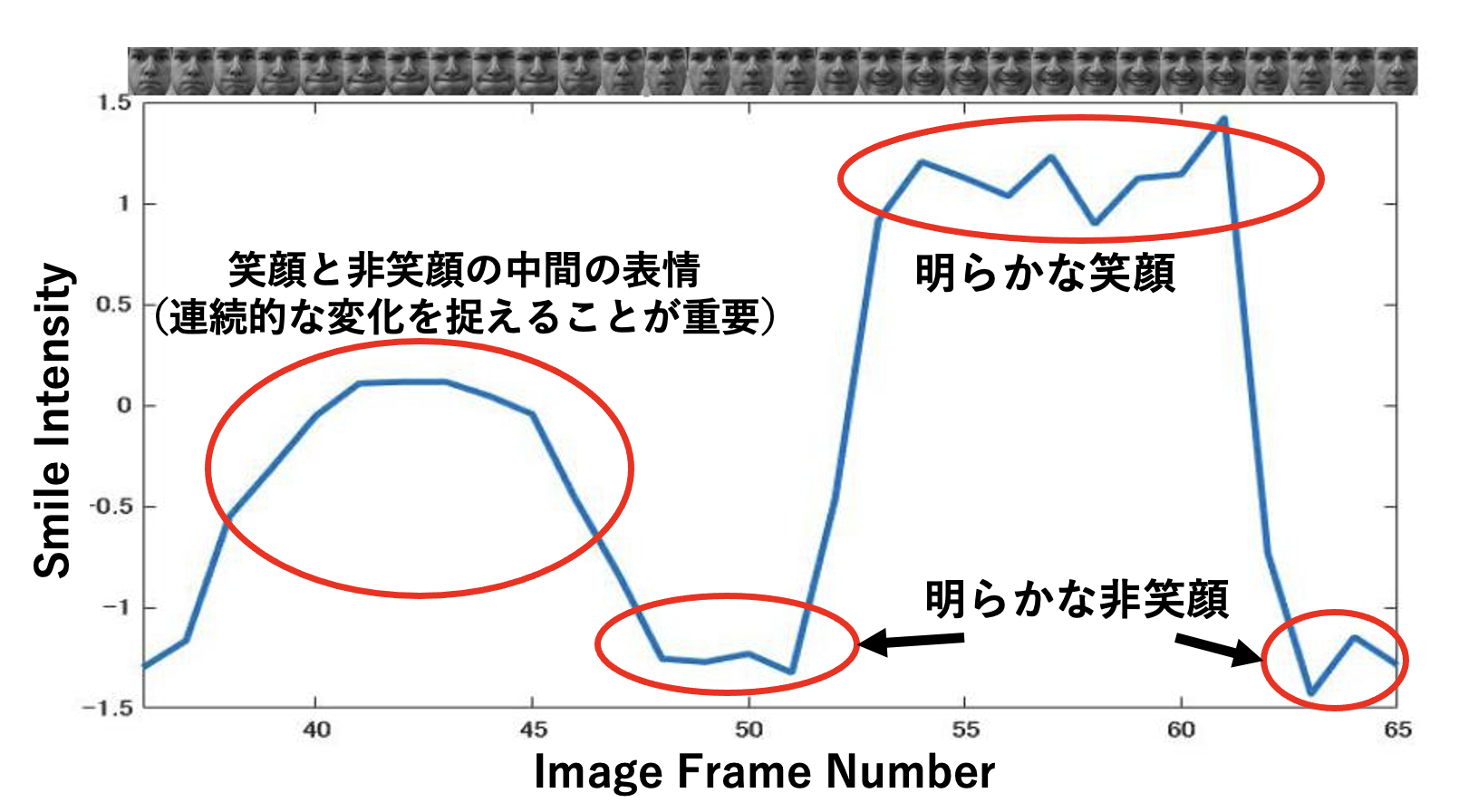

Specifically, we aim to build technologies such as sub-indication detection/prediction and atmosphere understanding. To this end, we will work on multimodal recognition and prediction techniques based on multimodal integration and multimodal disambiguation.

If such technologies can be developed, we believe it will be possible to build a Ba-aware system that communicates and interacts with people in a highly friendly manner.

This is a new research direction for our laboratory. As a first step, we are currently researching sub-indication sensing technologies, such as detecting earthquakes (and only earthquakes) and estimating their magnitude from vibrometer data recorded at a single location that also captures many other kinds of vibration, understanding the surrounding environment from sound alone, and audio-visual scene reconstruction. We plan to work with HRI-JP on specific applications of the communication and interaction technologies we are developing.

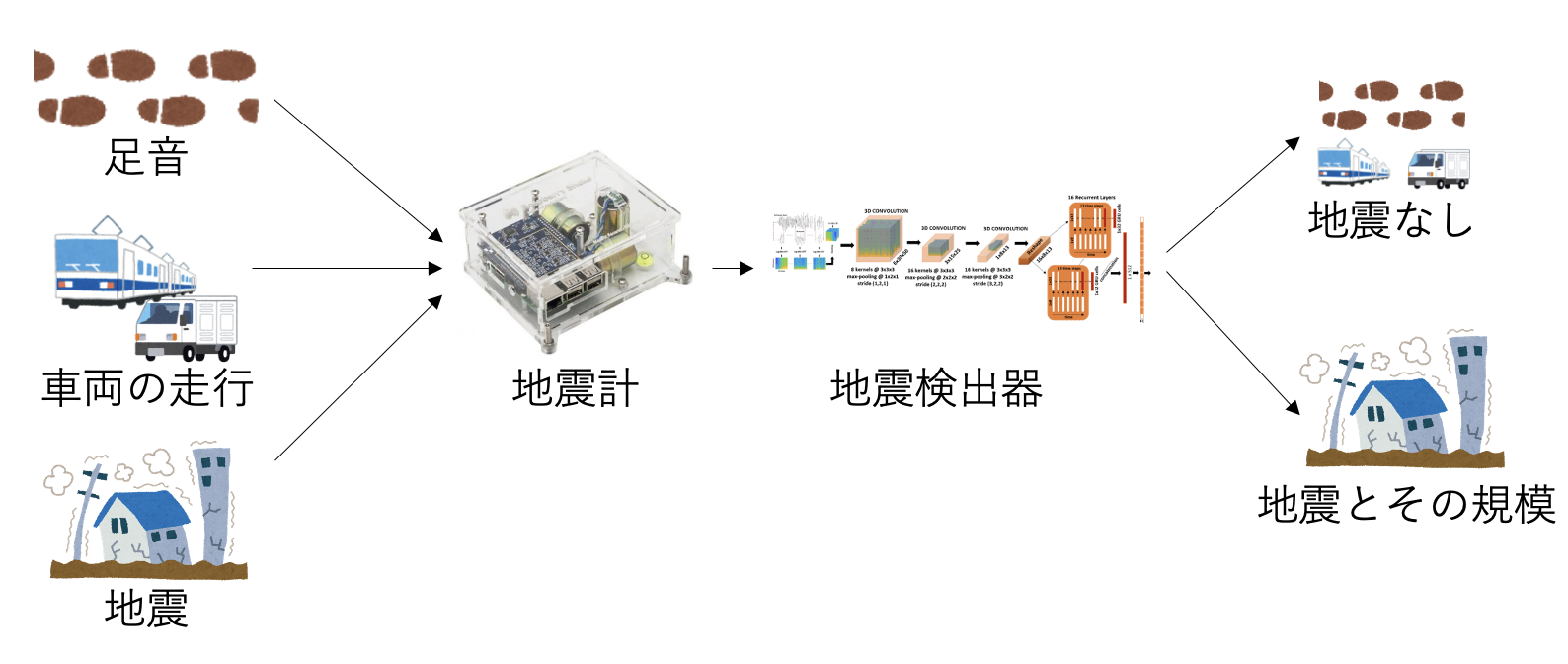

Earthquake detection and magnitude estimation using single-station seismic data

Earthquakes are usually detected using data from multiple locations where few vibrations other than earthquakes are observed. We have shown that, with a deep learning technique (RCNN), it is possible to detect earthquakes and estimate their magnitudes to some extent even from vibrometer data at a single location, such as a city center, where a wide variety of vibrations are measured.
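For contrast, a classical single-station approach is a hand-tuned energy trigger such as STA/LTA (short-term average energy divided by long-term average energy). The sketch below implements that classical baseline, not our RCNN method; it is included only to illustrate why a single urban station is hard: any strong non-earthquake vibration (traffic, construction) raises the ratio just as an earthquake does, and the ratio alone says nothing about magnitude. The window lengths, the threshold, and the synthetic trace are illustrative assumptions.

```python
import numpy as np


def sta_lta(signal: np.ndarray, n_sta: int, n_lta: int) -> np.ndarray:
    """Trailing-window STA/LTA ratio; ratio[i] uses samples up to and including i.

    Samples before a full long-term window is available get a ratio of 0.
    Assumes n_sta <= n_lta.
    """
    energy = signal.astype(float) ** 2
    csum = np.concatenate(([0.0], np.cumsum(energy)))  # csum[k] = sum of energy[:k]
    ratio = np.zeros(len(signal))
    idx = np.arange(n_lta - 1, len(signal))  # indices with a full LTA window
    sta = (csum[idx + 1] - csum[idx + 1 - n_sta]) / n_sta
    lta = (csum[idx + 1] - csum[idx + 1 - n_lta]) / n_lta
    ratio[idx] = np.divide(sta, lta, out=np.zeros_like(sta), where=lta > 0)
    return ratio


def first_trigger(ratio: np.ndarray, threshold: float):
    """Index of the first sample whose ratio exceeds the threshold, or None."""
    hits = np.flatnonzero(ratio > threshold)
    return int(hits[0]) if hits.size else None


# Synthetic single-station trace: background vibration plus one strong burst.
rng = np.random.default_rng(42)
trace = rng.normal(0.0, 1.0, 2_000)
trace[1_000:1_200] += rng.normal(0.0, 10.0, 200)

ratio = sta_lta(trace, n_sta=20, n_lta=400)
print(first_trigger(ratio, threshold=4.0))
```

The trigger fires shortly after the burst begins, but it would fire in the same way for a passing truck; separating earthquakes from other vibrations, and estimating magnitude, is what requires a learned model.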

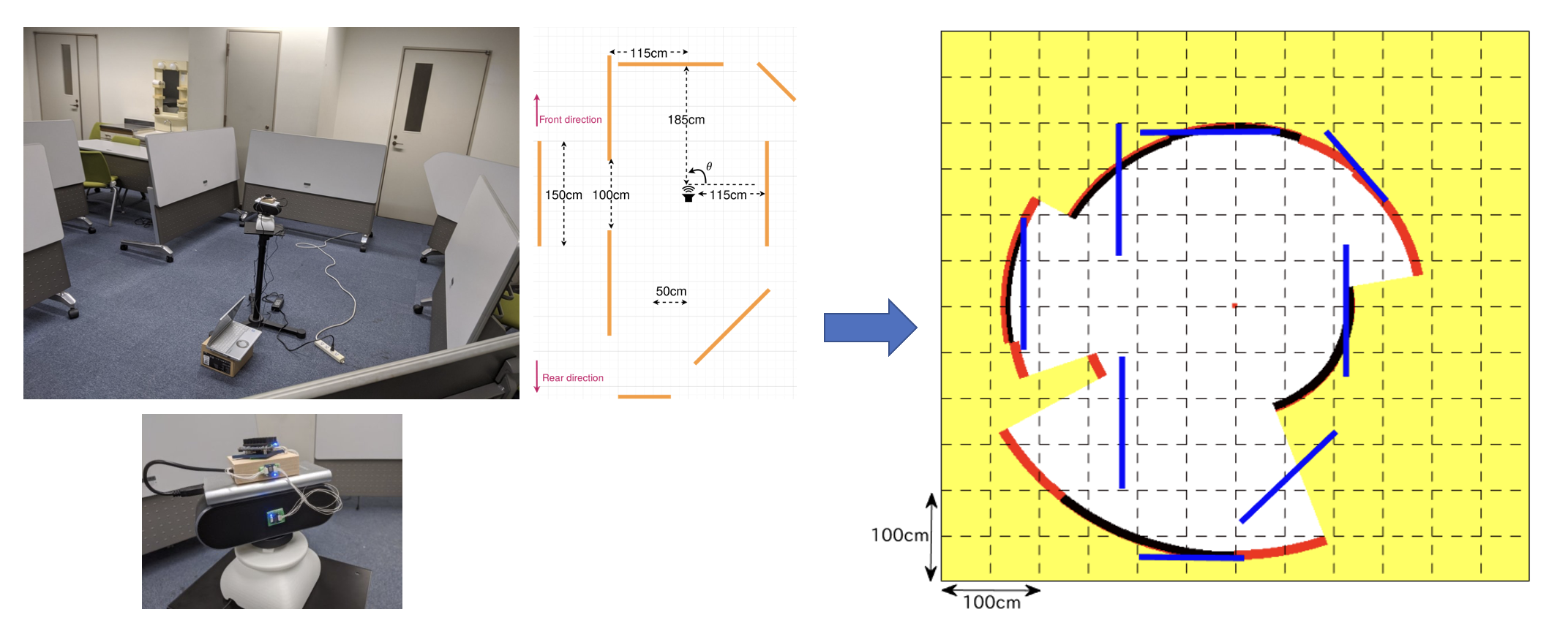

Map generation using audible sound

Acoustic measurement often relies on ultrasonic waves, but we are developing a method to reconstruct the surrounding environment using audible sound. People are known to be able to train their echolocation ability to detect walls and obstacles by ear alone, which can help visually impaired people. To build such a technology, we are working on map generation using audible sound.
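One basic building block of audible-sound mapping is echo ranging: emit a known audible signal, find the echo's delay by cross-correlation, and convert the round-trip time into a distance. The sketch below is a textbook illustration, not our map-generation method. It assumes a co-located speaker and microphone, a single reflecting wall, that the direct speaker-to-microphone sound has already been removed, a speed of sound of 343 m/s, and a 2 to 8 kHz linear chirp; all of these are assumptions chosen for the example.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C (assumed)


def make_chirp(fs: int, duration: float, f0: float, f1: float) -> np.ndarray:
    """Linear frequency sweep from f0 to f1 Hz (an audible probe signal)."""
    t = np.arange(int(fs * duration)) / fs
    k = (f1 - f0) / duration  # sweep rate in Hz/s
    return np.sin(2 * np.pi * (f0 * t + 0.5 * k * t ** 2))


def echo_distance(emitted: np.ndarray, recorded: np.ndarray, fs: int,
                  c: float = SPEED_OF_SOUND) -> float:
    """Distance to the reflector from the delay of the strongest echo."""
    # In 'full' mode, output index len(emitted) - 1 corresponds to zero delay.
    corr = np.correlate(recorded, emitted, mode="full")
    lag = int(np.argmax(np.abs(corr))) - (len(emitted) - 1)
    round_trip_time = lag / fs
    return c * round_trip_time / 2.0  # sound travels to the wall and back


# Simulate an echo from a wall 1.5 m away, with a little sensor noise.
fs = 48_000
chirp = make_chirp(fs, 0.01, 2_000.0, 8_000.0)
true_distance = 1.5
delay_samples = int(round(2 * true_distance / SPEED_OF_SOUND * fs))

rng = np.random.default_rng(0)
recorded = 0.01 * rng.standard_normal(2_000)
recorded[delay_samples:delay_samples + len(chirp)] += 0.3 * chirp

print(echo_distance(chirp, recorded, fs))
```

The estimate lands within one sample period of the true 1.5 m (about 3.6 mm at 48 kHz). A chirp is used because its autocorrelation has a sharp peak, which makes the delay easy to locate; building a map would additionally require multiple reflections, multiple directions, and handling of the direct sound.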

Audio-visual scene reconstruction

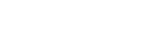

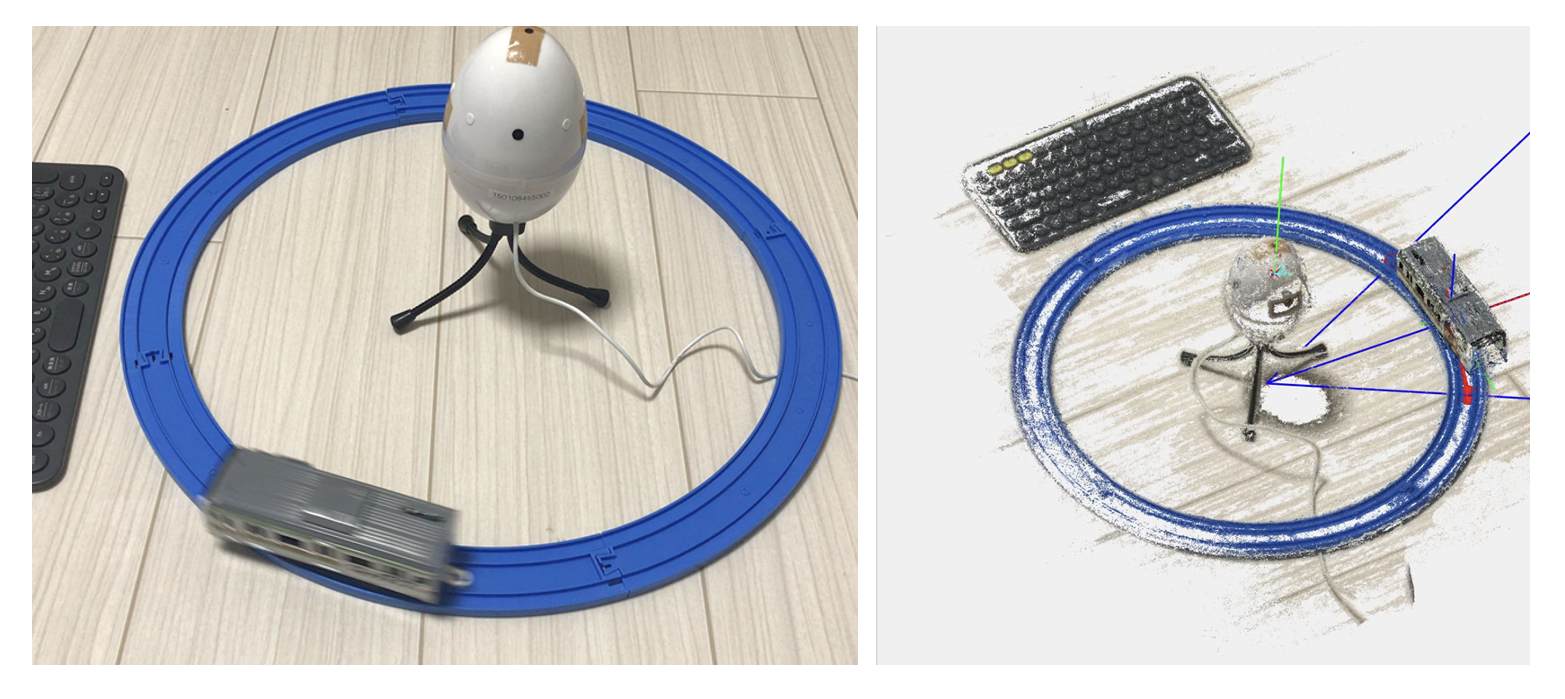

It is difficult to understand the surrounding environment from audio or vision alone. We try to resolve information that is missing or ambiguous in one modality by using the other. Figure 1 shows an example of 4D reconstruction of a scene with dynamic movement, using audio-visual integration to relax the strong assumption of structure from motion (SfM) that the scene is static. Figure 2 shows the reconstruction of transparent objects, which cannot be reconstructed from visual information alone.